What is it?

Imagine you could feed a computer a description of a never-before-seen animal and have it generate a realistic image of what it might look like. That's the power of generative AI, a type of artificial intelligence that creates new content, such as images, music, text, code, and even scientific data, based on what it has learned from existing content.

The process of learning from existing content is called training, and it results in the creation of a statistical model. When given a prompt (a short piece of text supplied as input to a model such as a Large Language Model, or LLM), generative AI uses this statistical model to predict what an expected response might be, and generates new content. Google Bard, Google Gemini, and OpenAI ChatGPT are examples of generative AI applications.
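To make the "train, then predict" idea concrete, here is a toy sketch in Python. It is not how an LLM works internally, because LLMs use deep neural networks over tokens. Instead, it assumes a simple bigram word model, chosen purely for illustration. The model "trains" by counting which word follows which. It then generates text by repeatedly predicting the most frequent next word. The corpus and the function names `train_bigram_model` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """'Training': count how often each word follows each other word."""
    words = text.lower().split()
    model = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        model[current_word][next_word] += 1
    return model

def generate(model, prompt, max_words=5):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    output = prompt.lower().split()
    for _ in range(max_words):
        candidates = model.get(output[-1])  # .get() does not create new entries
        if not candidates:  # no statistics for this word, so stop generating
            break
        next_word, _count = candidates.most_common(1)[0]
        output.append(next_word)
    return " ".join(output)

# Hypothetical toy training corpus.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)
print(generate(model, "the dog", max_words=4))  # → the dog sat on the cat
```

The generated sentence never appears in the training text. The model has recombined patterns it learned, which is a very small-scale version of "generating new content."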

Is Generative AI the same as Artificial Intelligence?

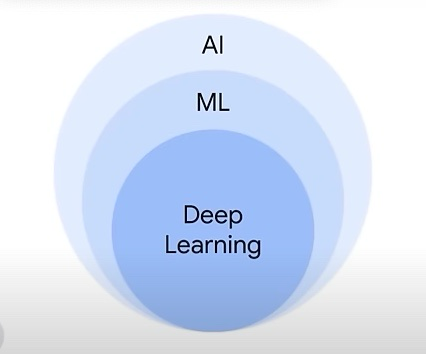

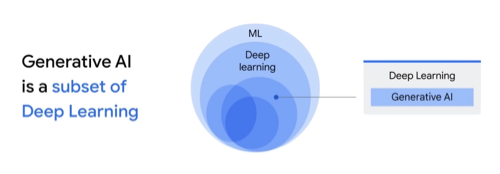

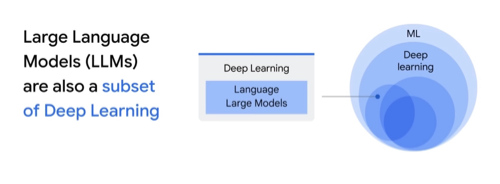

No. Generative AI is a subset of deep learning, which is a subset of machine learning, which in turn is a subset of the discipline called artificial intelligence. The relationship is similar to the way quantum mechanics is a subfield of the discipline of physics.

How does it work?

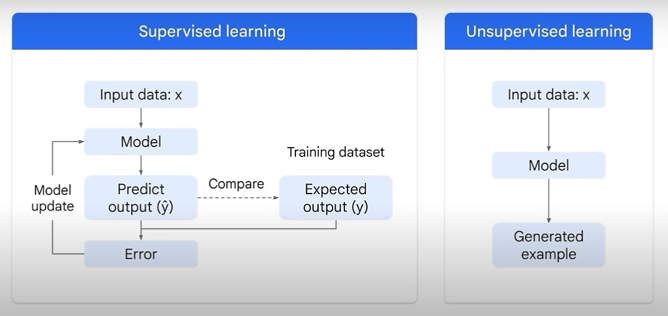

Machine learning (ML) is a program or system that trains a model from input data. The trained model can then make useful predictions on new, never-before-seen data, based on what it learned from the data used to train it. Two of the most common classes of machine learning models are:

- Supervised – The data is labeled, for example with tags such as a name, type, or number.

- Unsupervised – The data is not labeled. The model looks at the raw data to see whether it naturally falls into groups.
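As a minimal sketch of unsupervised grouping, the Python code below runs k-means on unlabeled one-dimensional values. K-means is one common clustering algorithm, chosen here for illustration; the text does not name it. The data, `k=2`, and the naive initialization are assumptions.

```python
def k_means_1d(values, k, iterations=10):
    """Group unlabeled numbers into k clusters with a simple 1-D k-means."""
    # Naive initialization: use the k smallest distinct values as starting centroids.
    centroids = sorted(set(values))[:k]
    for _ in range(iterations):
        # Assignment step: each value joins the cluster with the nearest centroid.
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster
        # (keep the old centroid if a cluster ends up empty).
        centroids = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return centroids, clusters

# Hypothetical unlabeled data with two natural groups.
data = [1.0, 1.5, 2.0, 10.0, 10.5, 11.0]
centroids, clusters = k_means_1d(data, k=2)
print(centroids)  # [1.5, 10.5]
print(clusters)   # [[1.0, 1.5, 2.0], [10.0, 10.5, 11.0]]
```

No labels are supplied. The two groups emerge only from how close the values are to each other.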

In supervised learning, input data values are fed into the model, and the model outputs a prediction. That prediction is compared with the actual value (the label) from the training data. The gap between the predicted and actual values is called the error. During training, the model adjusts itself to reduce this error until the predicted and actual values are close together. This is a classic optimization problem.
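The error-reduction loop described above can be sketched in Python as gradient descent on a one-variable linear model. Several choices here are assumptions for illustration: the mean squared error loss, the learning rate, the number of epochs, and the toy labeled data generated from y = 2x + 1. Real models have far more parameters but follow the same predict, measure error, adjust pattern.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ≈ w * x + b by repeatedly reducing the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        predictions = [w * x + b for x in xs]
        errors = [p - y for p, y in zip(predictions, ys)]  # predicted minus actual
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step against the gradient so the error shrinks.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

def mean_squared_error(xs, ys, w, b):
    """Average squared gap between predicted and actual values."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical labeled training data following y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train_linear_model(xs, ys)
print(round(w, 3), round(b, 3))  # → 2.0 1.0
```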

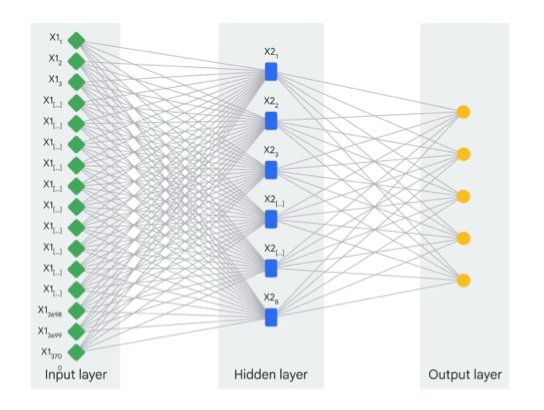

Deep learning is a type of machine learning that uses artificial neural networks. Artificial neural networks are inspired by the human brain. They are made up of many interconnected nodes, or neurons, that learn to perform tasks by processing data and making predictions. Deep learning models typically have many layers of neurons, which allows them to learn more complex patterns than traditional machine learning models.
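To show why stacking layers of neurons matters, here is a small Python sketch of a two-layer network that computes XOR. No single neuron with a step activation can represent this pattern. The weights are set by hand, which is an assumption for illustration. In real deep learning, they would be learned from data, for example by gradient descent.

```python
def step(z):
    """Step activation: the neuron 'fires' (outputs 1) when its weighted input is positive."""
    return 1 if z > 0 else 0

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus a bias, then an activation."""
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)

def xor_network(x1, x2):
    # Hidden layer: one neuron behaves like OR, the other like AND.
    h_or = neuron([x1, x2], [1, 1], -0.5)
    h_and = neuron([x1, x2], [1, 1], -1.5)
    # Output layer: "OR but not AND" is exactly XOR.
    return neuron([h_or, h_and], [1, -1], -0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```

The hidden layer turns the inputs into new features (OR and AND). The output neuron can then combine them, which is the layered pattern-building that deep networks rely on.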

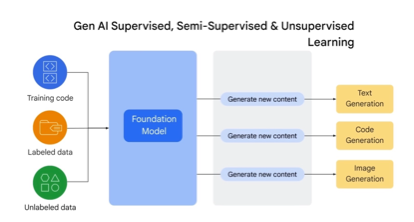

Neural networks can be trained on labeled and unlabeled data together. This is called semi-supervised learning. In semi-supervised learning, a neural network is trained on a small amount of labeled data and a large amount of unlabeled data. The labeled data helps the neural network learn the basic concepts of the task, while the unlabeled data helps it generalize to new examples.
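One simple way to combine a few labels with many unlabeled examples is self-training, also called pseudo-labeling. To keep it short, the Python sketch below uses a nearest-centroid classifier rather than a neural network. The data, labels, and function names are hypothetical. The two labeled points give each class a starting centroid. The current model then assigns pseudo-labels to the unlabeled points, and the centroids are re-estimated from all the data.

```python
def centroids_from(points, labels):
    """Mean value of the points belonging to each label."""
    sums, counts = {}, {}
    for p, lab in zip(points, labels):
        sums[lab] = sums.get(lab, 0.0) + p
        counts[lab] = counts.get(lab, 0) + 1
    return {lab: sums[lab] / counts[lab] for lab in sums}

def predict(centroids, point):
    """Assign the label whose centroid is closest to the point."""
    return min(centroids, key=lambda lab: abs(point - centroids[lab]))

def self_train(labeled_x, labeled_y, unlabeled_x, rounds=3):
    """Alternate between pseudo-labeling unlabeled data and refitting the centroids."""
    centroids = centroids_from(labeled_x, labeled_y)
    for _ in range(rounds):
        # Pseudo-label the unlabeled data with the current model...
        pseudo_y = [predict(centroids, x) for x in unlabeled_x]
        # ...then refit on the real labels plus the pseudo-labels.
        centroids = centroids_from(labeled_x + unlabeled_x, labeled_y + pseudo_y)
    return centroids

# Hypothetical data: only two labeled points, plus eight unlabeled ones.
labeled_x, labeled_y = [1.0, 9.0], ["small", "large"]
unlabeled_x = [0.5, 1.5, 2.0, 2.5, 3.0, 6.0, 6.5, 7.0]
before = centroids_from(labeled_x, labeled_y)
after = self_train(labeled_x, labeled_y, unlabeled_x)
print(predict(before, 4.5), predict(after, 4.5))  # small large
```

With the labels alone, the boundary sits halfway between 1.0 and 9.0. The unlabeled points shift it toward where the data actually clusters, so the prediction for 4.5 changes.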

Large Language Models (LLMs) are also a subset of deep learning. LLMs are one type of generative AI, since they generate novel combinations of text in the form of natural-sounding language.

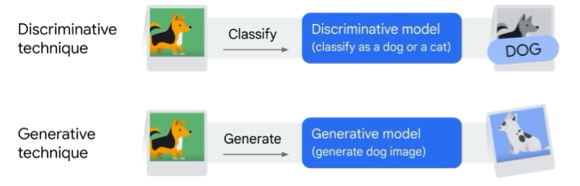

Deep learning models, and machine learning models in general, can be divided into two types:

- Generative – A model used to create new data. A generative model learns the probability distribution of existing data and generates new data instances from it.

- Discriminative – A model trained on labeled data points. Discriminative models learn the relationship between the features of the data points and their labels. Once trained, a discriminative model can predict the label of new data points.
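The contrast between the two types can be sketched in Python on hypothetical one-dimensional data. The generative side fits a normal (Gaussian) distribution to the class "A" values and samples new values from it. The discriminative side ignores how the data is distributed and learns only a threshold that separates the two labels. Both the Gaussian assumption and the threshold rule are illustrative choices, not the only way to build either kind of model.

```python
import random
import statistics

# Hypothetical labeled data: (feature value, label).
data = [(1.0, "A"), (1.2, "A"), (0.8, "A"), (3.0, "B"), (3.4, "B"), (2.6, "B")]

# Generative: learn the distribution of class "A" values, then sample new instances.
values_a = [x for x, label in data if label == "A"]
mu, sigma = statistics.mean(values_a), statistics.stdev(values_a)
rng = random.Random(0)  # seeded so the samples are reproducible
new_samples = [rng.gauss(mu, sigma) for _ in range(3)]

# Discriminative: learn a decision threshold that maps features directly to labels.
def fit_threshold(points):
    """Pick the candidate threshold that misclassifies the fewest training points."""
    candidates = sorted(x for x, _ in points)
    def errors(t):
        return sum((x >= t) != (label == "B") for x, label in points)
    return min(candidates, key=errors)

threshold = fit_threshold(data)

def classify(x):
    return "B" if x >= threshold else "A"

print("generated A-like values:", [round(s, 2) for s in new_samples])
print("threshold:", threshold, "| 2.0 ->", classify(2.0))
```

The generative model can produce new "A-like" values but says nothing directly about labels. The discriminative model can label new points but cannot generate data.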

At a high level, the classical supervised and unsupervised learning process uses training code and data (labeled data, in the supervised case) to build a model. Depending on the use case or problem, the model can then give you a prediction: it can classify something or cluster something.

What are the various AI model types?

- text-to-text: Takes a natural language input and produces text output. These models are trained to learn the mapping between a pair of texts (e.g., translation from one language to another).

- text-to-image: Generates an image from a text description. This model is trained on a large set of images, each captioned with a short text description. Diffusion is one method used to achieve this.

- text-to-task: This model is trained to perform a specific task or action based on input text. For example, this model could be trained to navigate a web UI or make changes to a document through the UI.

- text-to-video & text-to-3D: Text-to-video models aim to generate a video from text input. Similarly, text-to-3D models generate three-dimensional objects that correspond to a user's text description (e.g., for use in games or other 3D worlds).

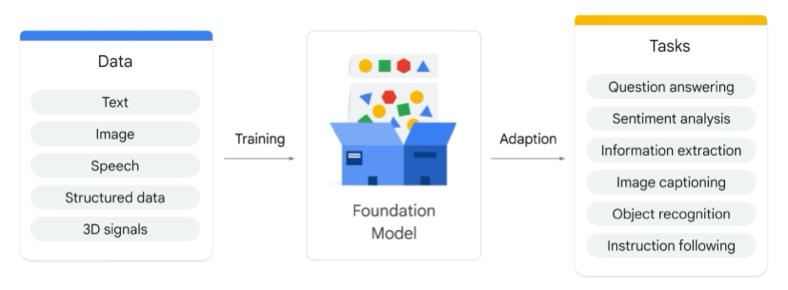

What is a Foundation Model?

A foundation model is a large AI model that is pre-trained on a vast quantity of data and designed to be adapted, or fine-tuned, to a wide range of downstream tasks, such as sentiment analysis, image captioning, and object recognition. Foundation models have the potential to transform many industries, including health care, finance, and customer service.

Real-world example

- A hospital buys a pre-trained Large Language Model from a provider such as OpenAI, Meta, or Google.

- The hospital fine-tunes the model with its own specific data.

- The hospital uses the fine-tuned model to increase predictive accuracy in detecting adverse events.
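The pre-train-then-fine-tune pattern in the hospital example can be sketched in Python. As a deliberate simplification, a tiny linear model stands in for a Large Language Model. The "general" and "specific" datasets, the learning rate, and the step counts are all hypothetical. The sketch shows only one thing: with the same small training budget on a small specific dataset, starting from pre-trained parameters reaches a lower error than starting from scratch.

```python
def gradient_steps(w, b, xs, ys, steps, learning_rate=0.05):
    """Run `steps` rounds of gradient descent on mean squared error, starting from (w, b)."""
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        w, b = w - learning_rate * grad_w, b - learning_rate * grad_b
    return w, b

def mse(params, xs, ys):
    """Mean squared error of the linear model (w, b) on the given data."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical "pre-training" on plentiful general data that follows y = 2x.
general_x = [i / 10 for i in range(-20, 21)]
general_y = [2 * x for x in general_x]
pretrained = gradient_steps(0.0, 0.0, general_x, general_y, steps=2000)

# Hypothetical small, specific dataset with a similar but not identical pattern: y = 2x + 0.5.
specific_x = [-1.5, -0.5, 0.5, 1.5]
specific_y = [2 * x + 0.5 for x in specific_x]

# Same small budget of 20 steps: start from pre-trained parameters vs. from zero.
fine_tuned = gradient_steps(*pretrained, specific_x, specific_y, steps=20)
from_scratch = gradient_steps(0.0, 0.0, specific_x, specific_y, steps=20)

print("fine-tuned error:  ", round(mse(fine_tuned, specific_x, specific_y), 4))
print("from-scratch error:", round(mse(from_scratch, specific_x, specific_y), 4))
```

The pre-trained model already knows the general relationship (the slope), so fine-tuning only has to learn the small, specific difference (the offset).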

The future of Generative AI

Generative AI is still at a relatively early stage of development, but it has the potential to change the way we live, work, and learn. As the technology continues to evolve, we can expect to see even more innovative and groundbreaking applications emerge.